Render Farm Management Software

Posted: admin on 24.05.2020

This article provides guidance on extending your existing, on-premises render farm to use compute resources on Google Cloud Platform (GCP). The article assumes that you have already implemented a render farm on-premises and are familiar with the basic concepts of visual effects (VFX) and animation pipelines, queue management software, and common software licensing methods.

Overview

Rendering 2D or 3D elements for animation, film, commercials, or video games is both compute- and time-intensive. Rendering these elements requires a substantial investment in hardware and infrastructure along with a dedicated team of IT professionals to deploy and maintain hardware and software.

When an on-premises render farm is at 100-percent utilization, managing jobs can become a challenge. Task priorities and dependencies, restarting dropped frames, and network, disk, and CPU load all become part of the complex equation that you must closely monitor and control, often under tight deadlines.

To manage these jobs, VFX facilities have incorporated queue management software into their pipelines. Queue management software can:

- Deploy jobs to on-premises and cloud-based resources.

- Manage inter-job dependencies.

- Communicate with asset management systems.

- Provide users with a user interface and APIs for common languages such as Python.

While some queue management software can deploy jobs to cloud-based workers, you are still responsible for connecting to the cloud, synchronizing assets, choosing a storage framework, managing image templates, and providing your own software licensing.

Some small or medium-sized facilities that lack the technical resources to implement a hybrid render farm can use Google's render farm service, Zync. To help determine the ideal solution for your facility, speak to your GCP representative.

Note: Production notes appear periodically throughout this article. These notes offer best practices to follow as you build your render farm.

Connecting to the cloud

Depending on your workload, decide how your facility connects to GCP, whether through a partner ISP, a direct connection, or over the public internet.

Connecting over the internet

Without any special connectivity, you can connect to Google's network and use our end-to-end security model by accessing GCP services over the internet. Utilities such as the gcloud and gsutil command-line tools and resources such as the Compute Engine API all use secure authentication, authorization, and encryption to help safeguard your data.

Cloud VPN

No matter how you're connected, we recommend that you use a virtual private network (VPN) to secure your connection.

Cloud VPN helps you securely connect your on-premises network to your Google Virtual Private Cloud (VPC) network through an IPsec VPN connection. Data that is in transit gets encrypted before it passes through one or more VPN tunnels.

Learn how to create a VPN for your project.

Customer-supplied VPN

Although you can set up your own VPN gateway to connect directly with Google, we recommend using Cloud VPN, which offers more flexibility and better integration with GCP.

Cloud Interconnect

Google supports multiple ways to connect your infrastructure to GCP. These enterprise-grade connections, known collectively as Cloud Interconnect, offer higher availability and lower latency than standard internet connections, along with reduced egress pricing.

Dedicated Interconnect

Dedicated Interconnect provides direct physical connections and RFC 1918 communication between your on-premises network and Google's network. It delivers connection capacity over the following types of connections:

- One or more 10 Gbps Ethernet connections, with a maximum of eight connections or 80 Gbps total per interconnect.

- One or more 100 Gbps Ethernet connections, with a maximum of two connections or 200 Gbps total per interconnect.

Dedicated Interconnect traffic is not encrypted, however, so if you need to transmit data across Dedicated Interconnect in a secure manner, you must establish your own VPN connection. Cloud VPN is not compatible with Dedicated Interconnect, so you must supply your own VPN in this case.

Partner Interconnect

Partner Interconnect provides connectivity between your on-premises network and your VPC network through a supported service provider. A Partner Interconnect connection is useful if your infrastructure is in a physical location that can't reach a Dedicated Interconnect colocation facility or if your data needs don't warrant an entire 10-Gbps connection.

Other connection types

Other ways to connect to Google might be available in your specific location. For help in determining the best and most cost-effective way to connect to GCP, speak to your GCP representative.

Securing your content

To run their content on any public cloud platform, content owners like major Hollywood studios require vendors to comply with security best practices that are defined both internally and by organizations such as the MPAA.

Although each studio has slightly different requirements, Securing Rendering Workloads provides best practices for building a hybrid render farm. You can also find security whitepapers and compliance documentation at cloud.google.com/security.

If you have questions about the security compliance audit process, speak to your GCP representative.

Organizing your projects

Projects are a core organizational component of GCP. In your facility, you can organize jobs under their own project or break them apart into multiple projects. For example, you might want to create separate projects for the previsualization, research and development, and production phases of a film.

Projects establish an isolation boundary for both network data and project administration. However, you can share networks across projects with Shared VPC, which provides separate projects with access to common resources.

Production notes: Create a Shared VPC host project that contains resources with all your production tools. You can designate all projects that are created under your organization as Shared VPC service projects. This designation means that any project in your organization can access the same libraries, scripts, and software that the host project provides.

The Organization resource

You can manage projects under an Organization resource, which you might have established already. Migrating all your projects into an organization provides a number of benefits.

Production notes: Designate production managers as owners of their individual projects and studio management as owners of the Organization resource.

Defining access to resources

Projects require secure access to resources coupled with restrictions on where users or services are permitted to operate. To help you define access, GCP offers Cloud Identity and Access Management (Cloud IAM), which you can use to manage access control by defining which roles have what levels of access to which resources.

Production notes: To restrict users' access to only the resources that are necessary to perform specific tasks based on their role, implement the principle of least privilege both on premises and in the cloud.

For example, consider a render worker, which is a virtual machine (VM) that you can deploy from a predefined instance template that uses your custom image. The render worker that is running under a service account can read from Cloud Storage and write to attached storage, such as a cloud filer or persistent disk. However, you don't need to add individual artists to GCP projects at all, because they don't need direct access to cloud resources.

You can assign roles to render wranglers or project administrators who have access to all Compute Engine resources, which permits them to perform functions on resources that are inaccessible to other users.

Define a policy to determine which roles can access which types of resources in your organization. The following table shows how typical production tasks map to IAM roles in GCP.

| Production task | Role name | Resource type |
|---|---|---|
| Studio manager | resourcemanager.organizationAdmin | Organization, Project |
| Production manager | owner, editor | Project |
| Render wrangler | compute.admin, iam.serviceAccountActor | Project |
| Queue management account | compute.admin, iam.serviceAccountActor | Organization, Project |
| Individual artist | [no access] | Not applicable |

Access scopes

Access scopes offer you a way to control the permissions of a running instance no matter who is logged in. You can specify scopes when you create an instance yourself or when your queue management software deploys resources from an instance template.

Scopes take precedence over the IAM permissions of an individual user or service account. This precedence means that an access scope can prevent a project admin from logging in to an instance to delete a storage bucket or change a firewall setting.

Production notes: By default, instances can read but not write to Cloud Storage. If your render pipeline writes finished renders back to Cloud Storage, add the scope devstorage.read_write to your instance at the time of creation.
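As a minimal sketch of the production note above, a deployment script might assemble the instance-creation command with the read/write storage scope. The instance name, zone, and the `build_create_command` helper are hypothetical and used only for illustration; the command is built but not run.

```python
# Full URI form of the devstorage.read_write access scope.
STORAGE_RW_SCOPE = "https://www.googleapis.com/auth/devstorage.read_write"


def build_create_command(instance_name, zone, scopes):
    """Return the gcloud argument list that creates an instance with the given scopes.

    The instance name and zone are placeholders supplied by the caller.
    """
    return [
        "gcloud", "compute", "instances", "create", instance_name,
        f"--zone={zone}",
        "--scopes=" + ",".join(scopes),
    ]


# Hypothetical worker name and zone.
command = build_create_command("render-worker-001", "us-central1-a", [STORAGE_RW_SCOPE])
print(" ".join(command))
```

A queue manager that deploys workers from an instance template would set the same scope on the template instead of on each instance.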

Choosing how to deploy resources

With cloud rendering, you can use resources only when needed, but you can choose from a number of ways to make resources available to your render farm.

Deploy on demand

For optimal resource usage, you can choose to deploy render workers only when you send a job to the render farm. You can deploy many VMs to be shared across all frames in a job, or even create one VM per frame.

Your queue management system can monitor running instances, requeue tasks if a VM is preempted, and terminate instances when individual tasks are completed.
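The on-demand approach can be sketched as follows, assuming a job is an inclusive frame range and that the queue manager keeps a simple mapping of workers to frames. The function names and data structures are hypothetical and do not represent any particular queue manager's API.

```python
def split_frames(first_frame, last_frame, worker_count):
    """Divide an inclusive frame range into contiguous chunks, one per worker."""
    frames = list(range(first_frame, last_frame + 1))
    # Never create more workers than there are frames, and always at least one.
    worker_count = max(1, min(worker_count, len(frames)))
    base, extra = divmod(len(frames), worker_count)
    chunks = []
    start = 0
    for index in range(worker_count):
        # The first `extra` workers take one additional frame each.
        size = base + (1 if index < extra else 0)
        chunks.append(frames[start:start + size])
        start += size
    return chunks


def requeue_preempted(pending, running, preempted_worker):
    """Move the frames of a preempted worker back onto the pending queue."""
    pending.extend(running.pop(preempted_worker, []))


shared = split_frames(1, 10, 3)      # several VMs shared across the job
per_frame = split_frames(1, 3, 3)    # one VM per frame

running = {"worker-a": [1, 2, 3, 4], "worker-b": [5, 6, 7]}
pending = []
requeue_preempted(pending, running, "worker-b")
```

Requesting as many workers as there are frames yields the one-VM-per-frame layout; a smaller count shares each VM across a contiguous block of frames.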

Deploy a pool of resources

You can also choose to deploy a group of instances, unrelated to any specific job, that your on-premises queue management system can access as additional resources. While less cost-effective than an on-demand strategy, a group of running instances can accept multiple jobs per VM, using all cores and maximizing resource usage. This approach might be the most straightforward strategy to implement because it mimics how an on-premises render farm is populated with jobs.

Licensing the software

Third-party software licensing can vary widely from package to package. Here are some of the licensing schemes and models that you might encounter in a VFX pipeline. For each scheme, the third column shows the recommended licensing approach.

| Scheme | Description | Recommendation |
|---|---|---|
| Node locked | Licensed to a specific MAC address, IP address, or CPU ID. Can be run only by a single process. | Instance based |
| Node based | Licensed to a specific node (instance). An arbitrary number of users or processes can run on a licensed node. | Instance based |
| Floating | Checked out from a license server that keeps track of usage. | License server |
| Software licensing | | |
| Interactive | Allows user to run software interactively in a graphics-based environment. | License server or instance based |
| Batch | Allows user to run software only in a command-line environment. | License server |
| Cloud-based licensing | | |
| Usage based | Checked out only when a process runs on a cloud instance. When the process finishes or terminates, the license is released. | Cloud-based license server |
| Uptime based | Checked out while an instance is active and running. When the instance is stopped or deleted, the license is released. | Cloud-based license server |

Using instance-based licensing

Some software programs or plugins are licensed directly to the hardware on which they run. This approach to licensing can present a problem in the cloud, where hardware identifiers such as MAC or IP addresses are assigned dynamically.

MAC addresses

When they are created, instances are assigned a MAC address that is retained so long as the instance is not deleted. You can stop or restart an instance, and the MAC address will be retained. You can use this MAC address for license creation and validation until the instance is deleted.

Assigning a static IP address

When you create an instance, it is assigned an internal and, optionally, an external IP address. To retain an instance's external IP address, you can reserve a static IP address and assign it to your instance. This IP address will be reserved only for this instance. Because static IP addresses are a project-based resource, they are subject to regional quotas.

You can also assign an internal IP address when you create an instance, which is helpful if you want the internal IP addresses of a group of instances to fall within the same range.

Hardware dongles

Older software might still be licensed through a dongle, a hardware key that is programmed with a product license. Most software companies have stopped using hardware dongles, but some users might have legacy software that is keyed to one of these devices. If you encounter this problem, speak to the software manufacturer to see if they can provide you with an updated license for your particular software.

If the software manufacturer cannot provide such a license, you could implement a network-attached USB hub or USB over IP solution.

Using a license server

Most modern software offers a floating license option. This option makes the most sense in a cloud environment, but it requires stronger license management and access control to prevent overconsumption of a limited number of licenses.

To help avoid exceeding your license capacity, you can choose, as part of your job queue process, which licenses to use and control the number of jobs that use licenses.

On-premises license server

You can use your existing, on-premises license server to provide licenses to instances that are running in the cloud. If you choose this method, you must provide a way for your render workers to communicate with your on-premises network, either through a VPN or some other secure connection.

Cloud-based license server

In the cloud, you can run a license server that serves instances in your project or even across projects by using Shared VPC. Floating licenses are sometimes linked to a hardware MAC address, so a small, long-running instance with a static IP address can easily serve licenses to many render instances.

Hybrid license server

Some software can use multiple license servers in a prioritized order. For example, a renderer might query the number of licenses that are available from an on-premises server, and if none are available, use a cloud-based license server. This strategy can help maximize your use of permanent licenses before you check out other license types.

Production notes: Define one or more license servers in an environment variable and define the order of priority; Autodesk Arnold, a popular renderer, helps you do this. If the job cannot acquire a license by using the first server, the job tries to use any other servers that are listed, as in the following example:

In the preceding example, the Arnold renderer tries to obtain a license from the server at x.x.0.1, port 5053. If that attempt fails, it then tries to obtain a license from the same port at the IP address x.x.0.2.
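The prioritized fallback itself can be illustrated with a short Python sketch. This is not how Arnold or any license manager is implemented; the host names, the `acquire_license` helper, and the `try_checkout` callback are hypothetical stand-ins for a real license checkout.

```python
def acquire_license(servers, try_checkout):
    """Try each license server in priority order and return the first that grants a license.

    `servers` is an ordered list of (host, port) pairs. `try_checkout` is a
    caller-supplied function that returns True when a license is granted.
    Returns None if every server refuses.
    """
    for host, port in servers:
        if try_checkout(host, port):
            return (host, port)
    return None


# Hypothetical setup: the on-premises server is listed first but has no free licenses.
servers = [("onprem-license", 5053), ("cloud-license", 5053)]
available = {"onprem-license": 0, "cloud-license": 4}

granted = acquire_license(servers, lambda host, port: available[host] > 0)
print(granted)
```

Listing the on-premises server first means that permanent licenses are consumed before any cloud-based ones are checked out.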

Cloud-based licensing

Some vendors offer cloud-based licensing that provides software licenses on demand for your instances. Cloud-based licensing is generally billed in two ways: usage based and uptime based.

Usage-based licensing

Usage-based licensing is billed based on how much time the software is in use. Typically with this type of licensing, a license is checked out from a cloud-based server when the process starts and is released when the process completes. So long as a license is checked out, you are billed for the use of that license. This type of licensing is typically used for rendering software.

Uptime-based licensing

Uptime-based or metered licenses are billed based on the uptime of your Compute Engine instance. The instance is configured to register with the cloud-based license server during the startup process. So long as the instance is running, the license is checked out. When the instance is stopped or deleted, the license is released. This type of licensing is typically used for render workers that a queue manager deploys.

Important: Third-party software licenses might have different requirements for use on a public cloud, so consider working with your corporate counsel to determine if your licenses are compatible with that cloud.

Choosing how to store your data

The type of storage that you choose on Google Cloud Platform depends on your chosen storage strategy along with factors such as durability requirements and cost. To learn more about Cloud Storage, see File Servers on Compute Engine.

Persistent disk

You might be able to avoid implementing a file server altogether by incorporating persistent disks (PDs) into your workload. PDs are a type of POSIX-compliant block storage, up to 64 TB in size, that are familiar to most VFX facilities. Persistent disks are available as both standard drives and solid-state drives (SSD). You can attach a PD in read-write mode to a single instance, or in read-only mode to a large number of instances, such as a group of render workers.

| Pros | Cons | Ideal use case |
|---|---|---|
| Mounts as a standard NFS or SMB volume. Can dynamically resize. Up to 128 PDs can be attached to a single instance. The same PD can be mounted as read-only on hundreds or thousands of instances. | Maximum size of 64 TB. Can write to PD only when attached to a single instance. Can be accessed only by resources that are in the same region. | Advanced pipelines that can build a new disk on a per-job basis. Pipelines that serve infrequently updated data, such as software or common libraries, to render workers. |

Object storage

Cloud Storage is highly redundant, highly durable storage that, unlike traditional file systems, is unstructured and practically unlimited in capacity. Files on Cloud Storage are stored in buckets, which are similar to folders, and are accessible worldwide.

Unlike traditional storage, object storage cannot be mounted as a logical volume by an operating system (OS). If you decide to incorporate object storage into your render pipeline, you must modify the way that you read and write data, either through command-line utilities such as gsutil or through the Cloud Storage API.

| Pros | Cons | Ideal use case |
|---|---|---|
| Durable, highly available storage for files of all sizes. Single API across storage classes. Inexpensive. Data is available worldwide. Virtually unlimited capacity. | Not POSIX-compliant. Must be accessed through API or command-line utility. In a render pipeline, data must be transferred locally before use. | Render pipelines with an asset management system that can publish data to Cloud Storage. Render pipelines with a queue management system that can fetch data from Cloud Storage before rendering. |

Other storage products

Other storage products are available as managed services, through third-party channels such as the GCP Marketplace, or as open source projects through software repositories or GitHub.

| Product | Pros | Cons | Ideal use case |
|---|---|---|---|
| Avere vFXT | High-performing and configurable. Read-through caching helps minimize latency for hybrid workflows. Can sync back to on-premises storage with FXT appliance. | Cost, third-party support from Avere. | Medium to large VFX facilities with hundreds of TBs of data to present on the cloud. |
| Elastifile, Cloud File System (ECFS) [Acquired by Google Cloud] | Clustered file system that can support thousands of simultaneous NFS connections. Able to synchronize with on-premises NAS cluster. | Although on-premises storage or cloud synchronization is available, data can sync only in a single direction. For example, on-premises NAS can read and write, but cloud-based ECFS is read only. No way to selectively sync files. No bidirectional sync. | Medium to large VFX facilities with hundreds of TBs of data to present on the cloud. |
| Pixit Media, PixCacheCloud | Scale-out file system that can support thousands of simultaneous NFS or POSIX clients. Data can be cached on demand from on-premises NAS, with updates automatically sent back to on-premises storage. | Cost, third-party support from Pixit. | Medium to large VFX facilities with hundreds of TBs of data to present on the cloud. |
| Cloud Filestore (Beta) | Fully managed storage solution on GCP. Simple to deploy and maintain. | Maximum 64 TB per instance. NFS performance is fixed and does not scale with the number of active clients. | Small-to-medium VFX facilities with a pipeline capable of asset synchronization. Shared disk across virtual workstations. |
| Cloud Storage FUSE | Mount Cloud Storage buckets as file systems. Low cost. | Not a POSIX-compliant file system. Can be difficult to configure and optimize. | VFX facilities that are capable of deploying, configuring, and maintaining an open source file system, with a pipeline that is capable of asset synchronization. |

Other storage types are available on GCP. For more information, speak to your GCP representative.

Implementing storage strategies

You can implement a number of storage strategies in VFX or animation production pipelines by establishing conventions that determine how to handle your data, whether you access the data directly from your on-premises storage or synchronize between on-premises storage and the cloud.

Strategy 1: Mount on-premises storage directly

If your facility has connectivity to GCP of at least 10 Gbps and is in close proximity to a GCP region, you can choose to mount your on-premises NAS directly on cloud render workers. While this strategy is straightforward, it can also be cost- and bandwidth-intensive, because anything that you create on the cloud and write back to storage is counted as egress data.

| Pros | Cons | Ideal use case |
|---|---|---|
| Straightforward implementation. Read/write to common storage. Immediate availability of data, no caching or synchronization necessary. | Can be more expensive than other options. Close proximity to a Google data center is necessary to achieve low latency. The maximum number of instances that you can connect to your on-premises NAS depends on your bandwidth and connection type. | Facilities near a Google data center that need to burst render workloads to the cloud, where cost is not a concern. Facilities with connectivity to GCP of at least 10 Gbps. |

Strategy 2: Synchronize on demand

You can choose to push data to the cloud or pull data from on-premises storage, or vice versa, only when data is needed, such as when a frame is rendered or an asset is published. If you use this strategy, synchronization can be triggered by a mechanism in your pipeline such as a watch script, by an event handler such as Cloud Pub/Sub, or by a set of commands as part of a job script.

You can perform a synchronization by using a variety of commands, such as the gcloud compute scp command, the gsutil rsync command, or UDP-based data transfer protocols (UDT). If you choose to use a third-party UDT such as Aspera, Tervela Cloud FastPath, BitSpeed, or FDT to communicate with a Cloud Storage bucket, refer to the third party's documentation to learn about their security model and best practices. Google does not manage these third-party services.
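As a rough sketch of how a job script might prepare a gsutil rsync call for either direction, the following Python helper builds the argument list. The bucket name, local paths, and the `build_rsync_command` helper are hypothetical; the command is assembled but not executed here.

```python
def build_rsync_command(source, destination, delete_extra=False):
    """Return a gsutil rsync argument list for a recursive, parallel sync.

    -m runs transfers in parallel, -r recurses into subdirectories, and
    -d (optional) deletes destination files that are absent from the source.
    """
    command = ["gsutil", "-m", "rsync", "-r"]
    if delete_extra:
        command.append("-d")
    command.extend([source, destination])
    return command


# Push finished frames to a bucket, and pull assets down to a worker.
# The bucket and directory names below are placeholders.
push = build_rsync_command("/mnt/renders/shot_010", "gs://example-bucket/renders/shot_010")
pull = build_rsync_command("gs://example-bucket/assets/shot_010", "/mnt/assets/shot_010")
print(" ".join(push))
print(" ".join(pull))
```

A job script could pass either list to `subprocess.run(command, check=True)` so that a failed transfer fails the task. Use the `-d` option with care, because it removes files from the destination.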

Push method

You typically use the push method when you publish an asset, place a file in a watch folder, or complete a render job, after which time you push it to a predefined location.

Examples:

- A cloud render worker completes a render job, and the resulting frames are pushed back to on-premises storage.

- An artist publishes an asset. Part of the asset-publishing process involves pushing the associated data to a predefined path on Cloud Storage.

Pull method

You use the pull method when a file is requested, typically by a cloud-based render instance.

Example: As part of a render job script, all assets that are needed to render a scene are pulled into a file system before rendering, where all render workers can access them.

| Pros | Cons | Ideal use case |
|---|---|---|
| Complete control over which data is synchronized and when. Ability to choose transfer method and protocol. | Your production pipeline must be capable of event handling to trigger push/pull synchronizations. Additional resources might be necessary to handle the synchronization queue. | Small to large facilities that have custom pipelines and want complete control over asset synchronization. |

Production notes: Manage data synchronization with the same queue management system that you use to handle render jobs. Synchronization tasks can use separate cloud resources to maximize available bandwidth and minimize network traffic.

Strategy 3: On-premises storage, cloud-based read-through cache

In this strategy, you implement a virtual caching appliance such as Avere vFXT in the cloud to act as both a read-through cache and a file server. Each cloud render worker mounts the caching appliance under the NFS or SMB protocol as it would with a conventional file server. If a render worker reads a file that isn't present in the cache, the file is transferred from on-premises storage to the cloud filer. Depending on how you've configured your caching file server, the data remains in the cache until:

- The data ages out, or remains untouched for a specified amount of time.

- Space is needed on the file server, at which time data is removed from the cache based on age.

This strategy reduces the amount of bandwidth and complexity required to deploy many concurrent render instances.
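The read-through behavior and the two eviction conditions can be illustrated with a small in-memory model. This is a conceptual sketch only, not how Avere vFXT or any caching appliance is implemented; the class, its parameters, and the `fetch` callback that stands in for on-premises storage are all hypothetical.

```python
class ReadThroughCache:
    """Toy read-through cache with age-based expiry and a fixed capacity."""

    def __init__(self, fetch, max_entries, max_age_seconds):
        self.fetch = fetch                  # called on a cache miss
        self.max_entries = max_entries
        self.max_age_seconds = max_age_seconds
        self.entries = {}                   # path -> (data, last access time)

    def read(self, path, now):
        self._expire(now)
        if path in self.entries:
            data, _ = self.entries[path]    # cache hit
        else:
            data = self.fetch(path)         # cache miss: read through to the origin
            if self.entries and len(self.entries) >= self.max_entries:
                # Space is needed: remove the entry that was accessed longest ago.
                oldest = min(self.entries, key=lambda p: self.entries[p][1])
                del self.entries[oldest]
        self.entries[path] = (data, now)
        return data

    def _expire(self, now):
        # Drop entries that have remained untouched for longer than the maximum age.
        stale = [p for p, (_, t) in self.entries.items() if now - t > self.max_age_seconds]
        for p in stale:
            del self.entries[p]


fetch_log = []

def fetch_from_origin(path):
    fetch_log.append(path)
    return "data:" + path

cache = ReadThroughCache(fetch_from_origin, max_entries=2, max_age_seconds=100)
cache.read("a.exr", now=0)     # miss
cache.read("a.exr", now=10)    # hit
cache.read("b.exr", now=20)    # miss
cache.read("c.exr", now=30)    # miss; a.exr is evicted to make space
cache.read("b.exr", now=200)   # b.exr has aged out, so it is fetched again
```

Timestamps are passed in explicitly so that the behavior is deterministic; a real cache would read a clock.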

In some cases, you might want to pre-warm your cache to ensure that all job-related data is present before rendering. To pre-warm the cache, read the contents of a directory that is on your cloud file server by performing a read or stat of one or more files. Accessing files in this way triggers the synchronization mechanism.
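A pre-warm task can be as simple as walking the job's directory and calling stat on each file. The sketch below assumes that the cloud file server is mounted at a local path; a temporary directory stands in for that mount point, and the `prewarm` helper name is hypothetical.

```python
import os
import tempfile


def prewarm(directory):
    """Stat every file under `directory` and return the number of files touched."""
    touched = 0
    for root, _dirs, files in os.walk(directory):
        for name in files:
            os.stat(os.path.join(root, name))
            touched += 1
    return touched


# Demonstration against a temporary directory standing in for the mounted file server.
with tempfile.TemporaryDirectory() as mount_point:
    for name in ("beauty.0001.exr", "beauty.0002.exr"):
        with open(os.path.join(mount_point, name), "w") as handle:
            handle.write("placeholder")
    warmed = prewarm(mount_point)

print(warmed)
```

Whether a stat is enough to pull file contents into the cache, or whether a full read is required, depends on the caching appliance, so check its documentation before relying on this approach.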

You can also add a physical on-premises appliance to communicate with the virtual appliance. For example, Avere offers an FXT physical storage appliance that can further reduce latency between your on-premises storage and the cloud.

| Pros | Cons | Ideal use case |
|---|---|---|
| Cached data is managed automatically. Reduces bandwidth requirements. Clustered cloud file systems can be scaled up or down depending on job requirements. | Can incur additional costs. Pre-job tasks must be implemented if you choose to pre-warm the cache. | Large facilities that deploy many concurrent instances and read common assets across many jobs. |

Filtering data

You can build a database of asset types and associated conditions to define whether to synchronize a particular type of data. You might never want to synchronize some types of data, such as ephemeral data that is generated as part of a conversion process, cache files, or simulation data. Consider also whether to synchronize unapproved assets, because not all iterations will be used in final renders.
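Such a database can start out as a simple rule table. The following sketch assumes that each asset is a dictionary with a `type` and an optional `approved` flag; the asset types, the field names, and the `should_sync` helper are hypothetical examples rather than a prescribed schema.

```python
# Rule table: whether each asset type is synchronized, and whether approval is required.
SYNC_RULES = {
    "texture":    {"sync": True,  "approved_only": True},
    "geometry":   {"sync": True,  "approved_only": False},
    "simulation": {"sync": False, "approved_only": False},
    "cache":      {"sync": False, "approved_only": False},
}


def should_sync(asset):
    """Return True if the asset's type is synchronized and its conditions are met."""
    rule = SYNC_RULES.get(asset["type"])
    if rule is None or not rule["sync"]:
        # Unknown types and excluded types are never synchronized.
        return False
    if rule["approved_only"] and not asset.get("approved", False):
        return False
    return True


assets = [
    {"name": "rock_diffuse", "type": "texture", "approved": True},
    {"name": "rock_diffuse_wip", "type": "texture", "approved": False},
    {"name": "smoke_sim", "type": "simulation"},
    {"name": "rock_model", "type": "geometry"},
]
to_sync = [asset["name"] for asset in assets if should_sync(asset)]
print(to_sync)
```

Treating unknown asset types as "do not sync" keeps unexpected data on premises until someone adds an explicit rule for it.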

Performing an initial bulk transfer

When implementing your hybrid render farm, you might want to perform an initial transfer of all or part of your dataset to Cloud Storage, persistent disk, or other cloud-based storage. Depending on factors such as the amount and type of data to transfer and your connection speed, you might be able to perform a full synchronization over the course of a few days or weeks. The following figure compares typical times for online and physical transfers.

If your transfer workload exceeds your time or bandwidth constraints, Google offers a number of transfer options to get your data into the cloud, including Google's Transfer Appliance.
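To judge whether an online transfer fits your time constraints, a back-of-the-envelope estimate is often enough. The sketch below assumes decimal units (1 TB = 10^12 bytes) and an `efficiency` factor for protocol overhead and link contention; the default of 0.8 is an illustrative assumption, not a measured value.

```python
def transfer_days(dataset_terabytes, link_gbps, efficiency=0.8):
    """Estimate the number of days needed to move a dataset over a network link."""
    bits = dataset_terabytes * 1e12 * 8                   # dataset size in bits
    seconds = bits / (link_gbps * 1e9 * efficiency)       # usable throughput in bits/s
    return seconds / 86400                                # seconds per day


# 100 TB over a 10 Gbps link and over a 1 Gbps link.
fast = transfer_days(100, 10)
slow = transfer_days(100, 1)
print(f"10 Gbps: {fast:.2f} days, 1 Gbps: {slow:.2f} days")
```

Under these assumptions, 100 TB takes about 1.16 days at 10 Gbps and about 11.6 days at 1 Gbps. Real transfers also depend on file count, file size, and storage throughput at both ends.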

Archiving and disaster recovery

It's worth noting the difference between archiving of data and disaster recovery. The former is a selective copy of finished work, while the latter is a state of data that can be recovered. You want to design a disaster recovery plan that fits your facility's needs and provides an off-site contingency plan. Consult with your on-premises storage vendor for help with a disaster recovery plan that suits your specific storage platform.

Archiving data in the cloud

After a project is complete, it is common practice to save finished work to some form of long-term storage, typically magnetic tape media such as LTO. These cartridges are subject to environmental requirements and, over time, can be logistically challenging to manage. Large production facilities sometimes house their entire archive in a purpose-built room with a full-time archivist to keep track of data and retrieve it when requested.

Searching for specific archived assets, shots, or footage can be time-consuming, because data might be stored on multiple cartridges, archive indexing might be missing or incomplete, or there might be speed limitations on reading data from magnetic tape.

Migrating your data archive to the cloud can not only eliminate the need for on-premises management and storage of archive media, but it can also make your data far more accessible and searchable than traditional archive methods can.

A basic archiving pipeline might look like the following diagram, employing different cloud services to examine, categorize, tag, and organize archives. From the cloud, you can create an archive management and retrieval tool to search for data by using various metadata criteria such as date, project, format, or resolution. You can also use the Machine Learning APIs to tag and categorize images and videos, storing the results in a cloud-based database such as BigQuery.

Further topics to consider:

- Automate the generation of thumbnails or proxies for content that resides on Cloud Storage Nearline or Cloud Storage Coldline tiers. Use these proxies within your media asset management system so that users can browse data while reading only the proxies, not the archived assets.

- Consider using machine learning to categorize live-action content. Use the Cloud Vision API to label textures and background plates, or the Cloud Video Intelligence API to help with the search and retrieval of reference footage.

- You can also use Cloud AutoML Vision to create a custom vision model to recognize any asset, whether live action or rendered.

- For rendered content, consider saving a copy of the render worker's disk image along with the rendered asset. If you need to re-render an archived shot, you will then have the correct software versions, plugins, OS libraries, and dependencies available to re-create the setup.

Managing assets and production

Working on the same project across multiple facilities can present unique challenges, especially when content and assets need to be available around the world. Manually synchronizing data across private networks can be expensive and resource-intensive, and is subject to local bandwidth limitations.

If your workload requires globally available data, you might be able to use Cloud Storage, which is accessible from anywhere that you can access Google services. To incorporate Cloud Storage into your pipeline, you must modify your pipeline to understand object paths, and then pull or push your data to your render workers before rendering. Using this method provides global access to published data but requires your pipeline to deliver assets to where they're needed in a reasonable amount of time.

For example, a texture artist in Los Angeles can publish image files to be used by a lighting artist in London. The process looks like this:

- The publish pipeline pushes files to Cloud Storage and adds an entry to a cloud-based asset database.

- An artist in London runs a script to gather assets for a scene. File locations are queried from the database and read from Cloud Storage to local disk.

- Queue management software gathers a list of assets that are required for rendering, queries them from the asset database, and downloads them from Cloud Storage to each render worker's local storage.

Using Cloud Storage in this manner also provides you with an archive of all your published data on the cloud if you choose to use Cloud Storage as part of your archive pipeline.
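The publish-and-gather flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: SQLite stands in for the cloud-based asset database, the bucket name `example-assets` and the `assets` table layout are hypothetical, and the actual transfer is passed in as a `download` callable so that the sketch runs without cloud credentials.

```python
import sqlite3
from typing import Callable, Iterable, List

# Hypothetical bucket name, used only for illustration.
BUCKET = "example-assets"


def open_asset_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open the stand-in asset database and create its table if needed."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS assets ("
        "name TEXT, version INTEGER, uri TEXT, PRIMARY KEY (name, version))"
    )
    return db


def publish(db: sqlite3.Connection, name: str, version: int) -> str:
    """Record a published asset and return the object path it maps to."""
    uri = f"gs://{BUCKET}/{name}/v{version:03d}"
    db.execute("INSERT INTO assets VALUES (?, ?, ?)", (name, version, uri))
    db.commit()
    return uri


def latest_uri(db: sqlite3.Connection, name: str) -> str:
    """Look up the object path of the newest version of an asset."""
    row = db.execute(
        "SELECT uri FROM assets WHERE name = ? ORDER BY version DESC LIMIT 1",
        (name,),
    ).fetchone()
    if row is None:
        raise KeyError(f"asset not published: {name}")
    return row[0]


def gather(
    db: sqlite3.Connection,
    names: Iterable[str],
    download: Callable[[str], None],
) -> List[str]:
    """Resolve each asset to its object path and hand it to the downloader."""
    uris = [latest_uri(db, name) for name in names]
    for uri in uris:
        download(uri)
    return uris
```

An artist-side gather script and a queue manager's pre-flight job could both call `gather`, differing only in the `download` callable they supply.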

Managing databases

Asset and production management software depends on highly available, durable databases that are served on hosts capable of handling hundreds or thousands of queries per second. Databases are typically hosted on an on-premises server that is running in the same rack as render workers, and are subject to the same power, network, and HVAC limitations.

You might consider running your MySQL, NoSQL, and PostgreSQL production databases as managed, cloud-based services. These services are highly available and globally accessible, encrypt data both at rest and in transit, and offer built-in replication functionality.

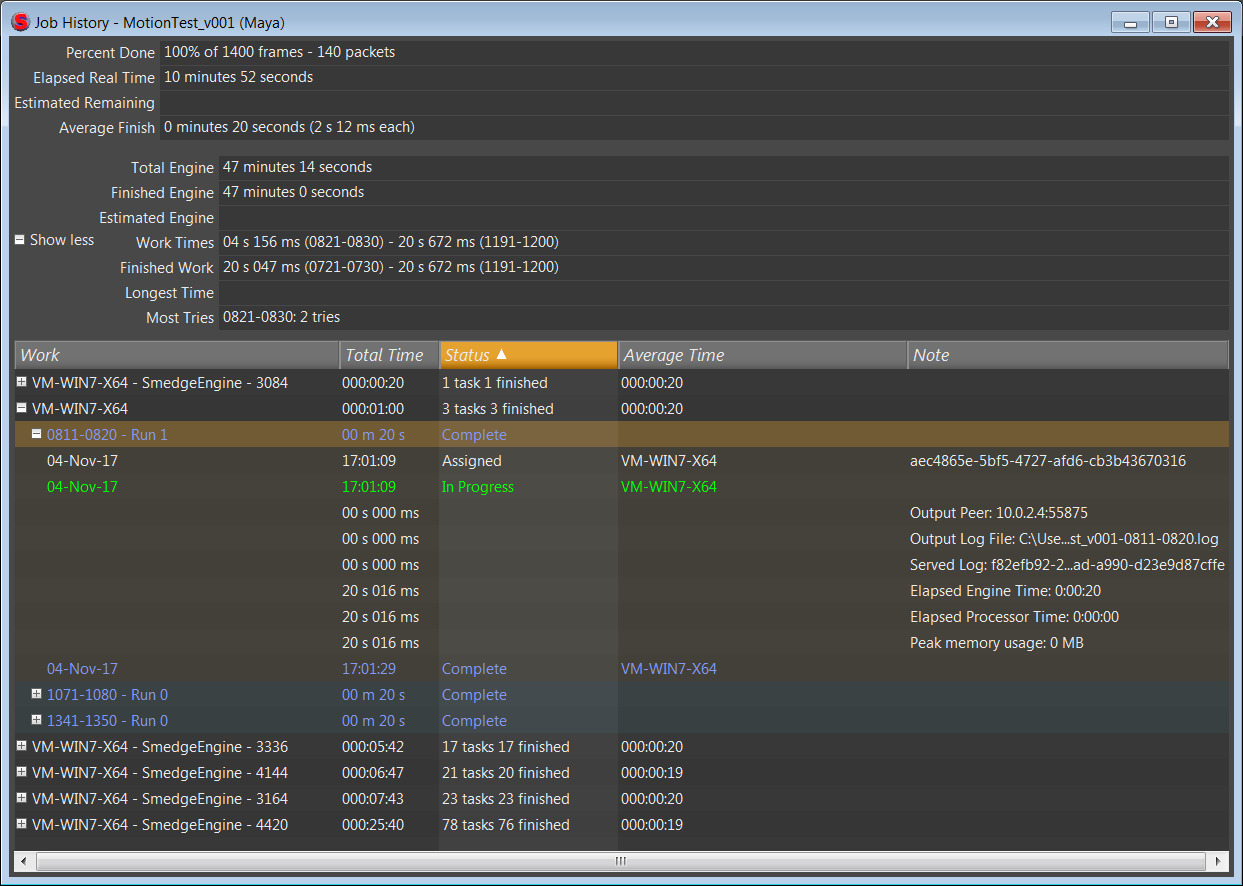

Managing queues

Commercially available queue management software programs such as Qube!, Deadline, and Tractor are widely used in the VFX/animation industry. There are also open source software options available, such as OpenCue. You can use this software to deploy and manage any compute workload across a variety of workers, not just renders. You can deploy and manage asset publishing, particle and fluid simulations, texture baking, and compositing with the same scheduling framework that you use to manage renders.

A few facilities have implemented general-purpose scheduling software such as HTCondor from the University of Wisconsin, Slurm from SchedMD, or Univa Grid Engine into their VFX pipelines. Software that is designed specifically for the VFX industry, however, pays special attention to features like the following:

- Job-, frame-, and layer-based dependency. Some tasks need to be completed before you can begin other jobs. For example, run a fluid simulation in its entirety before rendering.

- Job priority, which render wranglers can use to shift the order of jobs based on individual deadlines and schedules.

- Resource types, labels, or targets, which you can use to match specific resources with jobs that require them. For example, deploy GPU-accelerated renders only on VMs that have GPUs attached.

- Capturing historical data on resource usage and making it available through an API or dashboard for further analysis. For example, look at average CPU and memory usage for the last few iterations of a render to predict resource usage for the next iteration.

- Pre- and post-flight jobs. For example, a pre-flight job pulls all necessary assets onto the local render worker before rendering. A post-flight job copies the resulting rendered frame to a designated location on a file system and then marks the frame as complete in an asset management system.

- Integration with popular 2D and 3D software applications such as Maya, 3ds Max, Houdini, Cinema 4D, or Nuke.
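To make the dependency and priority features concrete, here is a minimal sketch of how a scheduler might order jobs. It is not how any of the products named above work internally; it uses Python's standard `graphlib` module, and the job names and priority values are hypothetical. Dependencies are always respected, and priority only decides the order within each batch of jobs that are ready at the same time.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def dispatch_order(
    dependencies: Dict[str, Set[str]],
    priority: Dict[str, int],
) -> List[str]:
    """Return a dispatch order that honors dependencies, then priority.

    dependencies maps each job to the set of jobs it must wait for.
    priority maps job names to integers; higher values run first.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    order: List[str] = []
    while sorter.is_active():
        # Jobs whose dependencies are all complete, highest priority first.
        ready = sorted(
            sorter.get_ready(),
            key=lambda job: (-priority.get(job, 0), job),
        )
        for job in ready:
            order.append(job)
            sorter.done(job)
    return order


if __name__ == "__main__":
    deps = {
        "render_fx": {"fluid_sim"},            # render only after the sim
        "comp": {"render_fx", "render_bg"},    # comp needs both renders
    }
    print(dispatch_order(deps, {"fluid_sim": 10, "render_bg": 5}))
```

In a real queue manager, `done` would be called when a worker reports completion rather than immediately, so independent jobs could run in parallel.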

Production notes: Use queue management software to recognize a pool of cloud-based resources as if they were on-premises render workers. This method requires some oversight to maximize resource usage by running as many renders as each instance can handle, a technique known as bin packing. These operations are typically handled both algorithmically and by render wranglers.
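As an illustration of bin packing, the following sketch uses the first-fit decreasing heuristic, one common approach among several, to place render tasks on instances of a single size. The task names, resource figures, and instance size are hypothetical, and a real scheduler would also weigh licenses, GPUs, and disk.

```python
from typing import List, Tuple

# (task name, vCPUs required, RAM required in GB)
Task = Tuple[str, int, float]


def pack_tasks(tasks: List[Task], vcpus: int, ram_gb: float) -> List[List[str]]:
    """Assign tasks to as few identical instances as the heuristic finds.

    Tasks are placed largest first; each goes on the first instance
    that still has enough free vCPUs and RAM.
    """
    # Each entry is [free vCPUs, free RAM, task names on that instance].
    instances: list = []
    ordered = sorted(tasks, key=lambda t: (t[1], t[2]), reverse=True)
    for name, need_cpu, need_ram in ordered:
        if need_cpu > vcpus or need_ram > ram_gb:
            raise ValueError(f"task {name} does not fit on one instance")
        for inst in instances:
            if inst[0] >= need_cpu and inst[1] >= need_ram:
                inst[0] -= need_cpu
                inst[1] -= need_ram
                inst[2].append(name)
                break
        else:
            # No existing instance has room, so start a new one.
            instances.append([vcpus - need_cpu, ram_gb - need_ram, [name]])
    return [inst[2] for inst in instances]


if __name__ == "__main__":
    jobs = [("a", 16, 32), ("b", 16, 32), ("c", 8, 16), ("d", 24, 48)]
    print(pack_tasks(jobs, vcpus=32, ram_gb=120))
```

First-fit decreasing is not guaranteed to be optimal, which is one reason render wranglers still review placements by hand.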

You can also automate the creation, management, and termination of cloud-based resources on an on-demand basis. This method relies on your queue manager to run pre- and post-render scripts that create resources as needed, monitor them during rendering, and terminate them when tasks are done.

Job deployment considerations

When you are implementing a render farm that uses both on-premises and cloud-based storage, here are some considerations that your queue manager might need to keep in mind:

- Licensing might differ between cloud and on-premises deployments. Some licenses are node based, some are process driven. Ensure that your queue management software deploys jobs to maximize the value of your licenses.

- Consider adding unique tags or labels to cloud-based resources to ensure that they get used only when assigned to specific job types.

- Use Stackdriver Logging to detect unused or idle instances.

- When launching dependent jobs, consider where the resulting data will reside and where it needs to be for the next step.

- If your path namespaces differ between on-premises and cloud-based storage, consider using relative paths to allow renders to be location agnostic. Alternatively, depending on the platform, you could build a mechanism to swap paths at render time.

- Some renders, simulations, or post-processes rely on random number generation, which can differ among CPU manufacturers. Even CPUs from the same manufacturer but different chip generations can produce different results. When in doubt, use identical or similar CPU types for all frames of a job.

- If you are using a read-through cache appliance such as an Avere vFXT, consider deploying a pre-flight job to pre-warm the cache and ensure that all assets are available on the cloud before you deploy cloud resources. This approach minimizes the amount of time that render workers are forced to wait while assets are moved to the cloud.
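One of the considerations above, swapping paths at render time, can be sketched as a small prefix-mapping function. The mount points in `PATH_MAP` are hypothetical; the sketch assumes POSIX-style paths and leaves any path outside the mapped roots unchanged.

```python
from pathlib import PurePosixPath
from typing import Dict

# Hypothetical mapping from on-premises roots to cloud roots.
PATH_MAP = {
    "/mnt/onprem/projects": "/mnt/cloud/projects",
}


def to_cloud_path(path: str, mapping: Dict[str, str]) -> str:
    """Rewrite an on-premises path to its cloud equivalent, if one is mapped."""
    source = PurePosixPath(path)
    # Try the longest prefix first so nested roots resolve predictably.
    for src_root in sorted(mapping, key=len, reverse=True):
        try:
            relative = source.relative_to(src_root)
        except ValueError:
            continue  # path is not under this root
        return str(PurePosixPath(mapping[src_root]) / relative)
    return path


if __name__ == "__main__":
    print(to_cloud_path("/mnt/onprem/projects/shotA/tex.exr", PATH_MAP))
```

Matching on whole path components rather than raw string prefixes avoids rewriting a sibling directory such as `/mnt/onprem/projects2`.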

Logging and monitoring

Recording and monitoring resource usage and performance is an essential aspect of any render farm. GCP offers a number of APIs, tools, and solutions to help provide insight into utilization of resources and services.

The quickest way to monitor a VM's activity is to view its serial port output. This output can be helpful when an instance is unresponsive through typical service control planes such as your render queue management supervisor.

Other ways to collect and monitor resource usage on GCP include:

- Use Stackdriver Logging to capture usage and audit logs, and to export the resulting logs to Cloud Storage, BigQuery, and other services.

- Use Stackdriver Monitoring to install an agent on your VMs to monitor system metrics.

- Incorporate the Stackdriver Logging API into your pipeline scripts to log directly to Stackdriver by using client libraries for popular scripting languages. See this VFX pipeline solution for more information.

- Use Stackdriver to create charts and custom dashboards to understand resource usage.
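As a lightweight complement to the options above, pipeline scripts can emit one JSON object per log line so that a logging agent or a later export step can parse the fields. The sketch below uses only Python's standard `logging` and `json` modules, not the Stackdriver client library. It assumes an agent on the VM is configured to ingest JSON lines; apart from `severity` and `message`, the field names (`shot`, `frame`, `seconds`) are hypothetical.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "severity": record.levelname,
            "message": record.getMessage(),
            "logger": record.name,
        }
        # Optional per-render fields passed through the `extra` argument.
        entry.update(getattr(record, "render", {}))
        return json.dumps(entry, sort_keys=True)


def make_logger(name: str = "render") -> logging.Logger:
    """Return a logger that writes JSON lines to standard error."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid adding duplicate handlers
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger()
    log.info(
        "frame %d done", 101,
        extra={"render": {"shot": "sh010", "frame": 101, "seconds": 312.5}},
    )
```

Per-frame timings logged this way can later feed the historical usage analysis that queue managers rely on.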

Configuring your render worker instances

For your workload to be truly hybrid, on-premises render nodes must be identical to cloud-based render nodes, with matching OS versions, kernel builds, installed libraries, and software. You might also need to reproduce mount points, path namespaces, and even user environments on the cloud exactly as they are on premises.

Choosing a disk image

You can choose from one of the public images or create your own custom image that is based on your on-premises render node image. Public images include a collection of packages that set up and manage user accounts and enable Secure Shell (SSH) key-based authentication.

Creating a custom image

If you choose to create a custom image, you will need to add more libraries to both Linux and Windows images for them to function properly in the Compute Engine environment.

Your custom image must comply with security best practices. If you are using Linux, install the Linux guest environment for Compute Engine to access the functionality that public images provide by default. By installing the guest environment, you can perform tasks, such as metadata access, system configuration, and optimizing the OS for use on GCP, by using the same security controls on your custom image that you use on public images.

Production notes: Manage your custom images in a separate project at the organization level. This approach gives you more precise control over how images are created or modified and allows you to apply versions, which can be useful when using different software or OS versions across multiple productions.

Automating image creation and instance deployment

You can use tools such as Packer to make creating images more reproducible, auditable, configurable, and reliable. You can also use a tool like Ansible to configure your running render nodes and exercise fine-grained control over their configuration and lifecycle.

For more information about how to automate image creation and instance configuration, see the Automated Image Builds with Jenkins, Packer, and Kubernetes and Compute Engine Management with Puppet, Chef, Salt, and Ansible solutions.

Choosing a machine type

On GCP, you can choose one of the predefined machine types or specify a custom machine type. Using custom machine types gives you control over resources so you can customize instances based on the job types that you run on GCP. When creating an instance, you can add GPUs and specify the number of CPUs, the CPU platform, the amount of RAM, and the type and size of disks.

Production notes: For pipelines that deploy one instance per frame, consider customizing the instance based on historical job statistics like CPU load or memory use to optimize resource usage across all frames of a shot. For example, you might choose to deploy machines with higher CPU counts for frames that contain heavy motion blur to help normalize render times across all frames.
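The idea of sizing an instance from historical job statistics can be sketched as follows. The limits encoded here (one or an even number of vCPUs, 0.9 to 6.5 GB of RAM per vCPU, memory in multiples of 256 MB) and the `custom-<vCPUs>-<memory MB>` naming are assumptions about N1 custom machine types that you should check against current Compute Engine documentation. The 25% headroom is an arbitrary illustrative choice.

```python
import math
from typing import Tuple

# Assumed limits for N1 custom machine types; verify before relying on them.
MIN_GB_PER_VCPU = 0.9
MAX_GB_PER_VCPU = 6.5
MEMORY_STEP_MB = 256


def size_custom_machine(
    peak_cores: float,
    peak_ram_gb: float,
    headroom: float = 1.25,
) -> Tuple[int, int, str]:
    """Suggest (vCPUs, memory in MB, machine type name) from past usage."""
    vcpus = max(1, math.ceil(peak_cores * headroom))
    if vcpus > 1 and vcpus % 2:
        vcpus += 1  # round odd counts up to the next even number
    ram_gb = peak_ram_gb * headroom
    # Add vCPUs until the requested RAM fits under the per-vCPU maximum.
    while ram_gb > vcpus * MAX_GB_PER_VCPU:
        vcpus = 2 if vcpus == 1 else vcpus + 2
    # Never go below the per-vCPU minimum.
    ram_gb = max(ram_gb, vcpus * MIN_GB_PER_VCPU)
    ram_mb = math.ceil(ram_gb * 1024 / MEMORY_STEP_MB) * MEMORY_STEP_MB
    return vcpus, ram_mb, f"custom-{vcpus}-{ram_mb}"


if __name__ == "__main__":
    # Past frames of this shot peaked at 8 busy cores and 32 GB of RAM.
    print(size_custom_machine(peak_cores=8, peak_ram_gb=32))
```

Feeding this function per-frame statistics, rather than per-shot averages, is what allows heavier frames to receive larger machines.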

Choosing between standard and preemptible VMs

Preemptible VMs (PVMs) draw on excess Compute Engine capacity that is sold at a much lower price than standard VMs. Compute Engine might terminate or preempt these instances if other tasks require access to that capacity. PVMs are ideal for rendering workloads that are fault tolerant and managed by a queueing system that keeps track of jobs that are lost to preemption.

Standard VMs can be run indefinitely and are ideal for license servers or queue administrator hosts that need to run in a persistent fashion.

Preemptible VMs are terminated automatically after 24 hours, so don't use them to run renders or simulations that run longer.

Preemption rates run from 5% to 15%, which for typical rendering workloads is probably tolerable given the reduced cost. Some preemptible best practices can help you decide the best way to integrate PVMs into your render pipeline. If your instance is preempted, Compute Engine sends a preemption signal to the instance, which you can use to trigger your scheduler to terminate the current job and requeue.
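A worker-side reaction to preemption might look like the following sketch. It assumes that the render worker process receives `SIGTERM` when the instance begins shutting down, for example through the OS shutdown sequence or a shutdown script; that wiring is an assumption, not something this article specifies. The `requeue` and `render_frame` callables are hypothetical stand-ins for your queue manager's API and your renderer.

```python
import signal
from typing import Callable, List, Sequence


class PreemptionGuard:
    """Stop rendering and requeue unfinished frames once preempted."""

    def __init__(self, requeue: Callable[[Sequence[int]], None]) -> None:
        self.requeue = requeue
        self.preempted = False

    def install(self) -> None:
        """Register the handler; call this from the main thread."""
        signal.signal(signal.SIGTERM, self.handle)

    def handle(self, signum, frame) -> None:
        # Only set a flag here; the render loop does the real work.
        self.preempted = True

    def run(
        self,
        frames: Sequence[int],
        render_frame: Callable[[int, Callable[[], bool]], bool],
    ) -> List[int]:
        """Render frames in order and return the ones that completed.

        render_frame receives the frame number and a should_stop callable,
        and returns True only if the frame finished.
        """
        completed: List[int] = []
        for index, frame in enumerate(frames):
            finished = (not self.preempted) and render_frame(
                frame, lambda: self.preempted
            )
            if not finished:
                # Hand the interrupted frame and the rest back to the queue.
                self.requeue(frames[index:])
                break
            completed.append(frame)
        return completed
```

Because the time between the signal and termination is short, the handler only sets a flag and the renderer is expected to poll `should_stop` frequently.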

| Standard VM | Preemptible VM |
|---|---|
| Can be used for long-running jobs. Ideal for high-priority jobs with hard deadlines. Can be run indefinitely, so ideal for license servers or queue administrator hosting. | Automatically terminated after 24 hours. Requires a queue management system to handle preempted instances. |

Production notes: Some renderers can perform a snapshot of an in-progress render at specified intervals, so if the VM gets preempted, you can pause and resume rendering without having to restart a frame from scratch. If your renderer supports snapshotting, and you choose to use PVMs, enable render snapshotting in your pipeline to avoid losing work. While snapshots are being written and updated, data can be written to Cloud Storage and, if the render worker gets preempted, retrieved when a new PVM is deployed. To avoid storage costs, delete snapshot data for completed renders.

Granting access to render workers

Cloud IAM helps you assign access to cloud resources to individuals who need access. For Linux render workers, you can use OS Login to further restrict access within an SSH session, giving you control over who is an admin.

Controlling costs of a hybrid render farm

When estimating costs, you must consider many factors, but we recommend that you implement these common best practices as policy for your hybrid render farm:

- Use preemptible instances by default. Unless your render job is extremely long-running (four or more hours per frame), or you have a hard deadline to deliver a shot, use preemptible VMs.

- Minimize egress. Copy only the data that you need back to on-premises storage. In most cases, this data will be the final rendered frames, but it can also be separate passes or simulation data. If you are mounting your on-premises NAS directly, or using a storage product such as Avere vFXT that synchronizes automatically, write all rendered data to the render worker's local file system, then copy what you need back to on-premises storage to avoid egressing temporary and unnecessary data.

- Right-size VMs. Make sure to create your render workers with optimal resource usage, assigning only the necessary number of vCPUs, the optimum amount of RAM, and the correct number of GPUs, if any. Also consider how to minimize the size of any attached disks.

- Consider the one-minute minimum. On GCP, instances get billed on a per-second basis with a one-minute minimum. If your workload includes rendering frames that take less than one minute, consider chunking tasks together to avoid deploying an instance for less than one minute of compute time.

- Keep large datasets on the cloud. If you use your render farm to generate massive amounts of data, such as deep EXRs or simulation data, consider using a cloud-based workstation that is further down the pipeline. For example, an FX artist might run a fluid simulation on the cloud, writing cache files to cloud-based storage. A lighting artist could then access this simulation data from a virtual workstation that is on GCP. For more information about virtual workstations, speak to your GCP representative.

- Take advantage of sustained and committed use discounts. If you run a pool of resources, sustained use discounts can save you up to 30% off the cost of instances that run for an entire month. Committed use discounts can also make sense in some cases.
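The one-minute minimum from the list above lends itself to a short worked example. The sketch below groups short frames into chunks that each take at least the minimum billed time, and compares billed seconds with and without chunking. It ignores instance boot time and assumes a uniform per-frame render time, both simplifications made for illustration.

```python
import math
from typing import List, Sequence

MIN_BILLED_SECONDS = 60  # one-minute minimum described above


def billed_seconds(runtime_seconds: float) -> int:
    """Seconds billed for one instance run, given per-second billing."""
    return max(MIN_BILLED_SECONDS, math.ceil(runtime_seconds))


def chunk_frames(
    frames: Sequence[int],
    seconds_per_frame: float,
    target_seconds: float = MIN_BILLED_SECONDS,
) -> List[Sequence[int]]:
    """Group frames so that each chunk runs for about target_seconds or more."""
    if seconds_per_frame <= 0:
        raise ValueError("seconds_per_frame must be positive")
    per_chunk = max(1, math.ceil(target_seconds / seconds_per_frame))
    return [frames[i:i + per_chunk] for i in range(0, len(frames), per_chunk)]


if __name__ == "__main__":
    frames = list(range(1, 11))  # ten frames at 15 seconds each
    unchunked = sum(billed_seconds(15) for _ in frames)
    chunks = chunk_frames(frames, seconds_per_frame=15)
    chunked = sum(billed_seconds(15 * len(c)) for c in chunks)
    print(chunks, unchunked, chunked)
```

In this example, ten 15-second frames run one per instance are billed as 600 seconds, while the same frames in three chunks are billed as 180 seconds; the last, partial chunk still pays the minimum.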

Comparing costs of on-premises and cloud-based render farms

Compare the cost of building and maintaining an on-premises render farm against building a cloud-based render farm. The following example cost breakdown compares the two scenarios (all costs in USD).

| Cost category | On-premises render farm costs | Cloud-based render farm costs |
|---|---|---|
| Initial build-out or connectivity costs | Price per node: $3,800; Number of nodes: 100; Networking hardware, clean-room build: $100,000; Storage hardware: $127,000; Initial utility company hookup: $20,000; Provisioning connectivity: $2,000; Total build-out costs: $629,000 | Networking hardware: $10,000; Storage hardware: $127,000; Provisioning connectivity: $2,000; Total build-out costs: $139,000 |
| Annual costs | Networking support contract: $15,000; Server support contract: $34,050 | Networking support contract: $1,500; Server support contract: $19,050 |
| Monthly costs | Bandwidth: $2,500; Utilities: $8,000; Cost per sq. ft.: $40; Sq. ft. required: 400; IT staffing/support: $15,000; Total monthly costs: $41,500 | Bandwidth: $2,500; 2x Dedicated Interconnect: $3,600; 100 GB egress: $12; Total monthly costs: $6,112 |
| Render farm utilization | Percentage monthly utilization: 50%; Number of render hours/month: 36,500 | Number of instances: 100; Machine type: n1-standard-32, preemptible; Percentage monthly utilization: 50%; Number of render hours/month: 36,500 |
| Cost per render hour | $5.62 | $1.46 |
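The subtotals in the breakdown can be checked with a few lines of arithmetic. The sketch below recomputes only the build-out totals, monthly totals, and render hours from the line items listed. It assumes 730 hours per month (8,760 hours per year divided by 12), which is not stated in the table. The per-render-hour figures also depend on how build-out and per-instance costs are amortized, which the table does not state, so the sketch does not attempt to reproduce them.

```python
# Assumption: 8,760 hours per year / 12 months.
HOURS_PER_MONTH = 730

# On-premises line items, in USD.
on_prem_build_out = (
    3_800 * 100    # price per node x number of nodes
    + 100_000      # networking hardware, clean-room build
    + 127_000      # storage hardware
    + 20_000       # initial utility company hookup
    + 2_000        # provisioning connectivity
)
on_prem_monthly = (
    2_500          # bandwidth
    + 8_000        # utilities
    + 40 * 400     # cost per sq. ft. x sq. ft. required
    + 15_000       # IT staffing/support
)

# Cloud-based line items, in USD.
cloud_build_out = 10_000 + 127_000 + 2_000
cloud_monthly = 2_500 + 3_600 + 12

# 100 nodes (or instances) at 50% monthly utilization.
render_hours_per_month = int(100 * HOURS_PER_MONTH * 0.50)

if __name__ == "__main__":
    print(on_prem_build_out, on_prem_monthly)
    print(cloud_build_out, cloud_monthly)
    print(render_hours_per_month)
```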

Summary

Extending your existing render farm into the cloud is a cost-effective way to leverage powerful, low-cost resources without capital expense. No two production pipelines are alike, so no document can cover every topic and unique requirement. For help with migrating your render workloads to the cloud, speak to your GCP representative.

Additional reading

Other applicable solutions, some of which have been mentioned in this article, are available on cloud.google.com.

Try out other Google Cloud Platform features for yourself. Have a look at our tutorials.